The World of Containers: Introduction to Docker

Software projects nowadays require much more than just writing code. Different programming languages, frameworks, and architectures increase the complexity of projects. Docker packages applications together with all their dependencies into so-called "images", which simplifies development and deployment workflows. This article is intended as an introduction to the topic and gives you an overview of the basic concepts of Docker.

Why Docker?

In the past, testing an application locally went something like this: a developer wants to test a colleague's application. To do so, he receives a list of requirements from her describing, for example, which operating system, which programs in which versions, or which database is needed. Testing was tedious for both parties, because a local development environment first had to be set up.

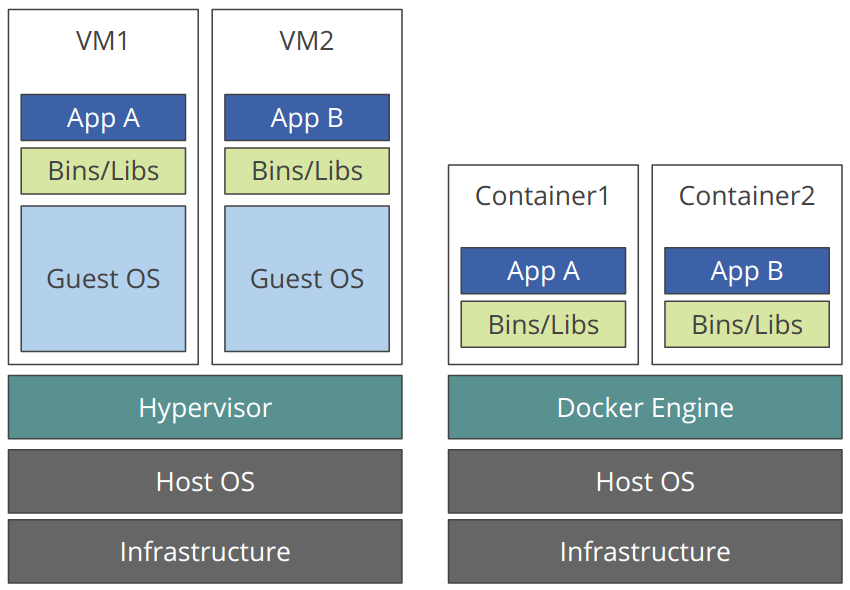

With the introduction of virtual machines (VMs), this process became much simpler. The colleague from the example could install her application with all of its dependencies on a VM and then hand the VM over to the developer. However, a VM requires a lot of resources, since each one runs a complete guest operating system, which is why several applications often had to share the same VM.

Basic Concepts

A Dockerfile is a file that contains the "recipe" for a Docker image: it lists all the instructions that docker build executes to create the image. The following figure shows an example of a Dockerfile on the left. FROM defines the basis of our image, an existing Docker image. COPY copies files and directories into the file system of the image. RUN executes a shell command while the image is being built with docker build; in our example, a predefined function is called that installs an R package. Finally, CMD defines the default command that runs as soon as a container is started with docker run: in our case, an R script is executed. These instructions are only examples; Dockerfiles support many others, such as ENV, WORKDIR or EXPOSE.
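To make the four instructions more concrete, here is a minimal sketch of a comparable build context. It is not the Dockerfile from the figure: the directory r-batch-demo, the base image rocker/r-ver:4.3.1 (an Ubuntu-based R image on Docker Hub), the package jsonlite and the script name analysis.R are hypothetical choices for illustration, and a plain install.packages() call stands in for the predefined installation function mentioned above. The shell script only writes the two files; building and running are left to the Docker CLI.

```shell
#!/bin/sh
# Minimal sketch of a build context for an R batch image.
# Hypothetical choices (not from the article): the directory r-batch-demo,
# the base image rocker/r-ver:4.3.1, the package jsonlite, the script analysis.R.
set -eu

dir=r-batch-demo
mkdir -p "$dir"

# The R script that CMD will execute when a container starts.
cat > "$dir/analysis.R" <<'EOF'
library(jsonlite)
cat(toJSON(list(status = "ok", rows = nrow(mtcars)), auto_unbox = TRUE), "\n")
EOF

# The Dockerfile itself: FROM, COPY, RUN and CMD.
cat > "$dir/Dockerfile" <<'EOF'
# Existing image to build on (pulled from Docker Hub if missing locally).
FROM rocker/r-ver:4.3.1

# Copy the script from the build context into the image's file system.
COPY analysis.R /app/analysis.R

# Runs once during docker build; library() makes the build fail if installation failed.
RUN Rscript -e 'install.packages("jsonlite"); library(jsonlite)'

# Default command when a container is started with docker run.
CMD ["Rscript", "/app/analysis.R"]
EOF

echo "Wrote $dir/Dockerfile and $dir/analysis.R"
```

With a running Docker daemon, docker build -t r-batch-demo r-batch-demo builds the image (this is when the RUN step executes), and docker run --rm r-batch-demo starts a container that executes the CMD, i.e. the R script. The library(jsonlite) call after the installation is deliberate: install.packages() on its own only emits a warning when an installation fails, so without it a broken RUN step could go unnoticed.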

A Docker image, on the other hand, is a build artifact. It bundles the files, dependencies and programs that the application requires. A Docker image consists of so-called layers: an image is usually based on another image and adds its own changes on top of it. In our example, the r-batch image is based on an ubuntu image to which an R installation and individual configurations have been added. An image can be pushed to a Docker registry and distributed from there, for example as the basis for further Dockerfiles. Docker Hub is the default public registry and hosts many open source images; our own images can also be found there. If a required image is not available locally, a local Docker installation pulls it from this registry by default.

The docker run command starts a container as an instance of a Docker image. Changes made inside a running container are lost once the container is deleted. To make them available the next time a container is started, they have to be integrated into the Docker image (i.e. into the Dockerfile from which a new image is then built). In general, containers are, or at least should be, stateless: they should not store any data inside the container that will be needed again later. This allows containers to be replicated as needed, i.e. scaled on any infrastructure. As cloud infrastructure becomes more and more important, this is one of the most important properties of a container.

Interacting with Containers

By default, a container is isolated from other containers and from the host machine. You can connect to a container via one or more networks, for example by publishing a port with the -p option of the docker run command:

docker run -p 8080:80 my-container

The parameter -p 8080:80 specifies that port 80 of the container should be mapped to port 8080 of the host machine. For example, if a web server is running inside the container, it can now be accessed via the browser at http://localhost:8080/.

Files or directories of the host system can be made available in the container with the help of so-called volumes. A volume is storage outside the container's own file system that can be used to move data into and out of the container and to keep it beyond the container's lifetime. For example, with

docker run -v /local/file/or/volume:/point/to/mount/within/container my-container

a host file or directory is mounted into the container at the given path. The container's own file system, however, is not a suitable place for persistent data: when a container is deleted, all changes and files that are neither part of the Docker image nor stored in a volume are lost.

Summary

Docker has become an essential part of our infrastructure and our data science projects. We use it for local development, for testing, and as a deliverable in customer projects. For some time, we have also been using Docker together with Kubernetes to parallelize processes. Over the past few years, we have particularly come to appreciate the independence from operating systems that Docker gives us and how much easier it has become to hand code over to customers. Even though Docker takes some training to learn, in our view this time is well invested, because the advantages Docker offers pay off in the long term.